When the batch size is one sample, the learning algorithm is called stochastic gradient descent. When the batch size is more than one sample and less than the size of the training dataset, the learning algorithm is called mini-batch gradient descent.

This Python code simulates a simplified image classification training process. It uses a list of dictionaries, each containing a picture emoji and a label (“cat” or “dog”), to represent a dataset. The code iterates through this dataset in batches for a specified number of epochs, simulating the process of showing pictures to a model and updating its understanding.
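A minimal sketch of such a simulation might look like the following. The dataset contents, the `simulate_training` name and the chosen `batch_size` and `epochs` values are assumptions made for illustration, and no real model is trained; the function only counts and prints the update steps.

```python
# Hypothetical toy dataset: each item pairs a picture emoji with a label.
dataset = [
    {"image": "🐱", "label": "cat"},
    {"image": "🐶", "label": "dog"},
    {"image": "🐱", "label": "cat"},
    {"image": "🐶", "label": "dog"},
    {"image": "🐱", "label": "cat"},
    {"image": "🐶", "label": "dog"},
]


def simulate_training(data, batch_size, epochs):
    """Walk through the data in batches and return the number of update steps."""
    steps = 0
    for epoch in range(1, epochs + 1):
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            steps += 1  # one simulated "update" per batch
            shown = " ".join(f"{item['image']}={item['label']}" for item in batch)
            print(f"epoch {epoch}, step {steps}: showing {shown}")
    return steps


# batch_size=1 mimics stochastic gradient descent: one update per sample.
sgd_steps = simulate_training(dataset, batch_size=1, epochs=1)

# 1 < batch_size < len(dataset) mimics mini-batch gradient descent.
mini_batch_steps = simulate_training(dataset, batch_size=2, epochs=2)
```

With six samples, a batch size of 1 gives six steps in one epoch, while a batch size of 2 gives three steps per epoch.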

Key Differences:

Too few epochs may result in underfitting, leaving the model with high error rates. Monitoring metrics such as validation loss helps determine the optimal number, and techniques like early stopping can halt training when improvements plateau. Epochs are essential because ML models rarely converge to optimal performance in a single pass.
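As a rough illustration of early stopping, the sketch below scans a list of per-epoch validation losses and reports the epoch at which training would halt. The function name, the `patience` and `min_delta` parameters and the loss values are all hypothetical; deep learning frameworks ship their own early-stopping utilities with different interfaces.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the 1-based epoch at which training would stop.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses)  # never triggered: every epoch was run


# Made-up validation losses, one per epoch.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.51, 0.52, 0.60]
stop_epoch = early_stopping_epoch(val_losses, patience=2)
```

Here the loss stops improving after epoch 4, so with a patience of 2 the loop halts at epoch 6 instead of running all seven epochs.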

There is no magic rule for selecting the number of epochs; it is a hyperparameter that must be set before training begins. So if a dataset consists of 1,000 images split into mini-batches of 100 images, it takes 10 iterations to complete a single epoch.

Machine learning (ML) is a branch of artificial intelligence (AI). As such, it allows computers to learn from data and make predictions or decisions without being explicitly programmed for specific tasks. As they receive more and more data, ML algorithms improve their performance. Thus, the foundation of ML is data, which comes in both structured and unstructured formats.
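The arithmetic behind the 1,000-image example can be written as a one-line helper. This is a small sketch; the function name is an assumption, and rounding up reflects the common convention of keeping a final, smaller batch when the dataset size is not a multiple of the batch size.

```python
import math


def iterations_per_epoch(num_samples, batch_size):
    """Number of weight updates needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


# The example from the text: 1,000 images in mini-batches of 100.
even_case = iterations_per_epoch(1000, 100)

# When the split is uneven, the last batch is simply smaller.
uneven_case = iterations_per_epoch(1000, 64)
```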

Instead of feeding the entire dataset at once (which could be memory-intensive or computationally expensive), we divide it into smaller, manageable chunks called batches. To explain epochs, we should first recall the basics of model training: training adjusts the model’s parameters to minimize a loss function. This optimization is performed by an optimization method such as stochastic gradient descent (SGD) or one of its variants (e.g., Adam, RMSprop).
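To make the idea of batch-wise optimization concrete, here is a minimal sketch of plain mini-batch gradient descent fitting a one-parameter model `y ≈ w * x` in pure Python. The data, learning rate and function name are assumptions chosen for illustration; Adam and RMSprop use more elaborate update rules that are not shown here.

```python
import random


def minibatch_sgd(xs, ys, batch_size, epochs, lr=0.01, seed=0):
    """Fit y ≈ w * x by mini-batch gradient descent on mean squared error."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # new sample order every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of mean((w*x - y)^2) with respect to w over the batch.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad  # one iteration = one weight update
    return w


# Toy data generated from y = 2x, so the fitted weight should approach 2.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0 * x for x in xs]
w = minibatch_sgd(xs, ys, batch_size=4, epochs=50, lr=0.01)
```

Each pass of the outer loop is one epoch and each pass of the inner loop is one iteration on one batch.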

What Is An Epoch In Machine Learning?

A complex model typically can’t learn all the patterns with a single pass over the data. The total number of data points in a single batch passed through the neural network is called the batch size. Epochs and batches are the dynamic duo of ML training: epochs provide the iterative depth, batches the efficient breadth. Understanding their differences empowers you to craft robust models that learn effectively without wasting resources. Whether you are a data scientist tweaking hyperparameters or a developer deploying ML apps, mastering these concepts is key to success. Training for too many epochs can lead to overfitting, where the model memorizes the training data but fails on unseen test data.

Example Workflow For Setting Batch Size And Epochs

- The size of a batch must be greater than or equal to one and less than or equal to the number of samples in the training dataset.

- Ideally, start with a mini-batch size that your hardware can support without running out of memory.

- When the child looks at these 10 pictures and learns a little bit more about cats and dogs, they’ve completed one “step” of learning.

- Without shuffling, the model might overemphasize certain sequences, leading to biased learning.
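Two of the points above, the valid range for the batch size and the role of shuffling, can be sketched in a small helper. The function name and its `shuffle` and `seed` parameters are hypothetical; data-loading utilities in ML frameworks expose similar options under their own names.

```python
import random


def make_batches(samples, batch_size, shuffle=True, seed=None):
    """Split samples into batches, optionally shuffling them first."""
    n = len(samples)
    # Enforce 1 <= batch_size <= number of samples.
    if not 1 <= batch_size <= n:
        raise ValueError(f"batch_size must be between 1 and {n}, got {batch_size}")
    order = list(samples)  # copy so the caller's data is left untouched
    if shuffle:
        random.Random(seed).shuffle(order)
    return [order[i:i + batch_size] for i in range(0, n, batch_size)]


samples = list(range(10))
batches = make_batches(samples, batch_size=4, seed=42)
```

Calling the helper once per epoch with a different seed (or with `seed=None`) gives the model a different sample order on each pass.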

For example, when your dataset consists of 1,000 samples, one epoch means that the model has seen each of those samples once. This comparison is often summarized as the difference between epochs and iterations, since they determine how often the model sees the data versus how often it updates its weights. Start with a mini-batch size that your hardware can support without running out of memory, then increase or decrease it and observe how training stability and model accuracy respond. In federated learning (decentralized ML), batches and epochs are typically local to each device, and the resulting updates are aggregated into a global model.

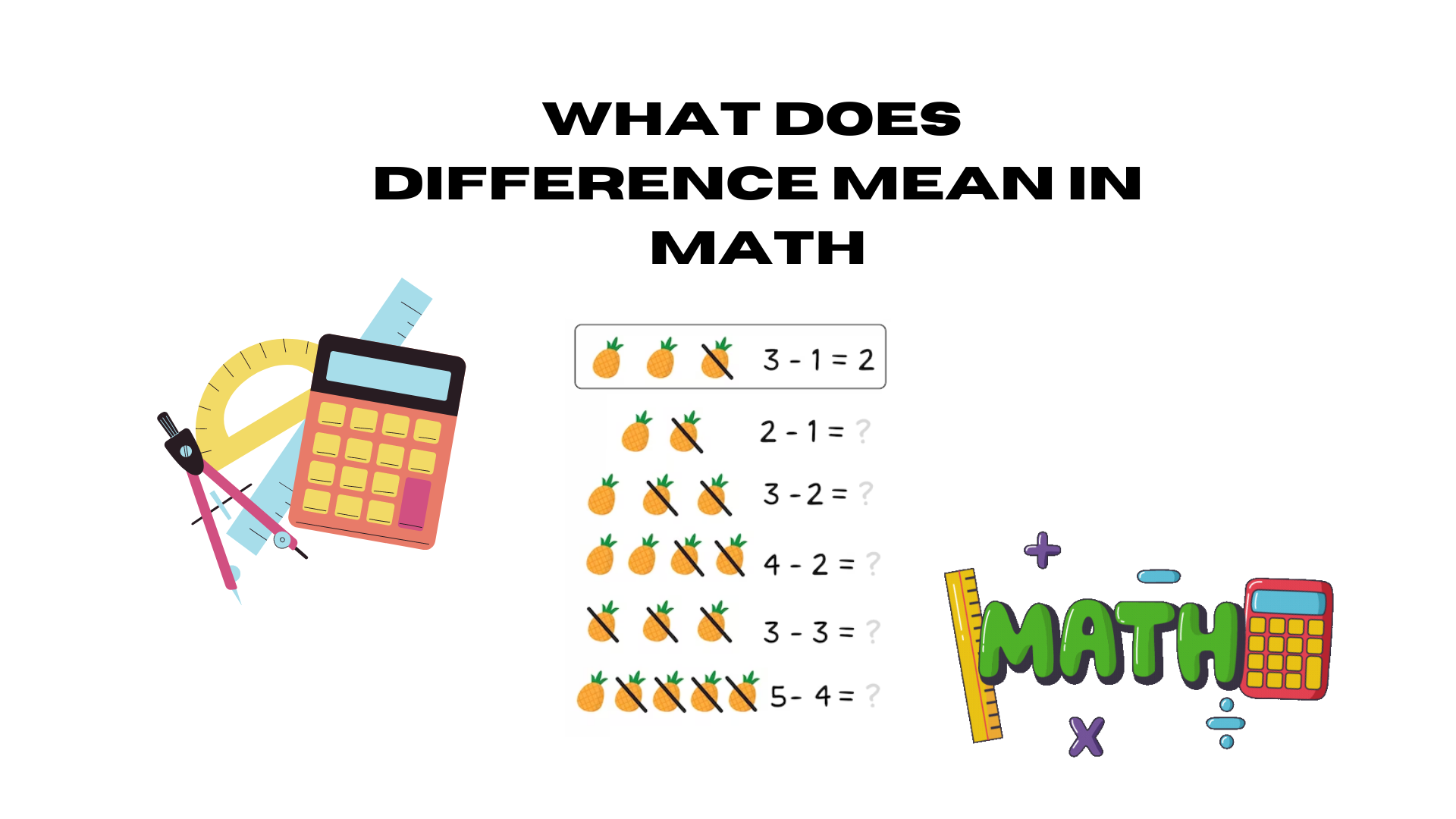

Two hyperparameters that often confuse beginners are the batch size and the number of epochs. This analogy explains machine learning concepts using a child learning to distinguish cats and dogs from a picture book.

The world of machine learning and neural networks can be very hard to understand because of its jargon, especially for beginners. Although these terms are all related, they represent different parts of the learning process. In this blog post, we explain the distinctions between a batch and an epoch, highlight their importance, and show how they affect training in neural networks. Understanding them helps you set up training loops correctly, adjust parameters like learning rate or batch size with confidence, and track your model’s progress more clearly.

This analogy shows the relationship between epochs (complete passes through the data), batches (chunks of data) and iterations (update steps per chunk). Training a model for more epochs can likewise improve its performance. Each iteration updates the model’s weights after processing a batch of samples, which helps speed up training. In this post, you discovered the difference between batches and epochs in stochastic gradient descent. The number of epochs is the number of full passes through the training dataset.

Therefore, we must pass the full dataset through the neural network more than once to move the fitted curve from underfitting to optimal. However, using more epochs than needed can cause the curve to overfit. The batch size and the number of epochs both play a crucial role in the training dynamics of a machine learning model. They affect several aspects of the training process, including computational efficiency, convergence behavior and generalization capability.

A batch, by contrast, is a small portion of the training data used to update the model’s weights in a single training step. Instead of working with the entire dataset as a single block, the dataset is broken into smaller segments, or batches, which makes computation more efficient and can also speed up convergence. Just like teaching a child, training a machine learning model involves breaking the learning process down into smaller, manageable steps. The relationship between steps and epochs helps us understand how many times the model updates its understanding within and across these learning cycles. The training dynamics of machine learning models are governed by three key parameters: epochs, iterations and batches. An epoch is one complete pass through the entire training dataset, an iteration is a single update, and a batch is the set of samples on which that update is computed.
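The three terms can be lined up in a single training-loop skeleton. The sketch below performs no learning; it only records which slice of the dataset each iteration of each epoch would process. The function name and the tuple layout are assumptions made for illustration.

```python
def training_schedule(num_samples, batch_size, epochs):
    """List (epoch, iteration, start, end) for every update in a training run."""
    schedule = []
    for epoch in range(1, epochs + 1):                   # one full pass over the data
        iteration = 0
        for start in range(0, num_samples, batch_size):  # one batch at a time
            end = min(start + batch_size, num_samples)
            iteration += 1
            # A real loop would compute gradients on samples[start:end]
            # and update the weights here.
            schedule.append((epoch, iteration, start, end))
    return schedule


schedule = training_schedule(num_samples=1000, batch_size=100, epochs=5)
total_updates = len(schedule)
```

For 1,000 samples, a batch size of 100 and 5 epochs, the schedule holds 50 entries: 10 iterations in each of the 5 epochs.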